Machine Learning, Videogames, and Mechanical Turks

As a very passionate gamer and a Machine Learning researcher, this is a post that I definitely couldn't help writing 😁

Nowadays AI, and by extension Machine Learning, is common in several areas of game design. For example, games like Minecraft and No Man's Sky successfully use procedural generation to create entire worlds, and Machine Learning is heavily used in graphics: Nvidia's DLSS technology lets games render images at a lower resolution (say, 1080p) and upscale them with Machine Learning before outputting them at 4K, aiming for higher framerates and a lighter overall GPU workload with little loss in image quality.

In this post, however, I want to discuss specifically how AI is used to model the behavior of NPCs (non-playable characters). Videogames almost always simulate scenarios where one or multiple agents cooperate or compete to achieve certain goals, so controlling NPCs is intuitively the first task that comes to mind when mentioning AI in game design.

AI and Games: an Age-Old Love Story

The idea of gaming AIs capable of outwitting humans has fascinated people for ages. In the 18th century the “Turk” chess automaton achieved worldwide fame for beating the likes of Napoleon Bonaparte and Benjamin Franklin; it was actually a hoax (a human secretly operated the automaton from the inside), but the idea of a Verne-esque machine smart enough to defeat humans captivated people immensely - and it still does.

Since the 1950s, computer scientists have been applying AI to board games with increasingly impressive results. In 1997 IBM's Deep Blue famously defeated the chess champion Garry Kasparov: it was the first time a reigning world chess champion lost a match to a computer under standard tournament conditions. The recent rise of Machine Learning has allowed AIs to tackle the harder game of Go: in 2016 DeepMind's AlphaGo beat the Go champion Lee Sedol, with Lee himself later claiming that AIs have become "an entity that cannot be defeated".

I believe that AI in games is so appealing because games provide a fictional setting in which both people and AI agents are restricted to the same set of rules and actions. This scenario facilitates the illusion of interacting with an artificial human-like intelligence, i.e., a general AI, and allows the players to immerse themselves fully in the game.

Given this premise, Machine Learning should be ubiquitous in videogames, which, being natively digital, provide the ideal environment for AI agents... right?

Does the Game Industry Use Machine Learning?

Unfortunately, Machine Learning is still rarely used to drive NPC behavior in videogames. Game developers instead prefer traditional AI techniques such as Pathfinding, Finite State Machines and Behavior Trees.

Pathfinding

Pathfinding studies the best way to move from a point A to a point B; most pathfinding algorithms are heavily based on graph traversal algorithms, such as Dijkstra's algorithm or A*. They are used in almost all games where agents act on a map (especially one made of tiles): for example, 2D strategy games such as Age of Empires, MMORPGs such as World of Warcraft, and first-person shooters such as Half-Life and Counter-Strike.

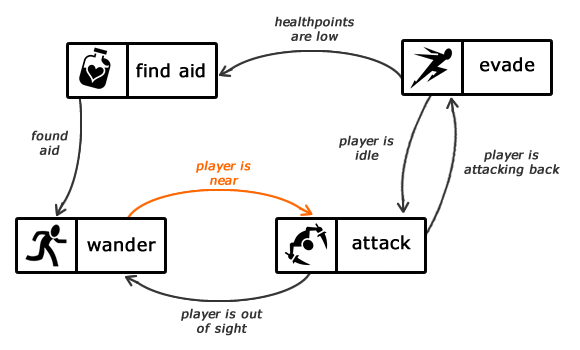
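To make this a bit more concrete, here is a minimal sketch of A* on a tile map. It is only an illustration, not how any of the games above implement it: the grid encoding (`#` for walls), the `a_star` function name and the 4-directional movement with unit cost are all assumptions of mine.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest 4-connected path from start to goal, or None.

    grid is a list of strings where '#' marks a wall (an assumed encoding);
    start and goal are (row, col) tuples.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates when each move costs 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # entries are (g + h, g, cell)
    came_from = {start: None}
    best_cost = {start: 0}

    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            # Walk the parent links back to the start.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale entry: a cheaper route was found later
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if not (0 <= r < len(grid) and 0 <= c < len(grid[r])):
                continue  # outside the map
            if grid[r][c] == "#":
                continue  # wall tile
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # the goal cannot be reached


GRID = [
    "....",
    ".##.",
    "....",
]
print(a_star(GRID, (0, 0), (2, 3)))
```

With the heuristic replaced by a constant zero, the same loop behaves like Dijkstra's algorithm; the heuristic is what lets A* explore fewer tiles on the way to the goal.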

Finite State Machines (FSM)

Finite State Machines (FSMs) define all the situations (states) that AI agents can encounter, and script the corresponding reactions. For instance, if the health value of an agent falls below a threshold, this may trigger the reaction "heal yourself"; applying it may switch the FSM to another state, which in turn will elicit a new reaction, and so on, resulting in a continuous decision-making loop. FSMs are great at modeling simple behaviors, and they have been used a lot in gaming, ranging from the ghosts of Pac-Man to the NPCs of Call of Duty, Metal Gear Solid, Halo and The Elder Scrolls (e.g., Skyrim).

A simple schema for an FSM (source: gamedevelopment.tutsplus).

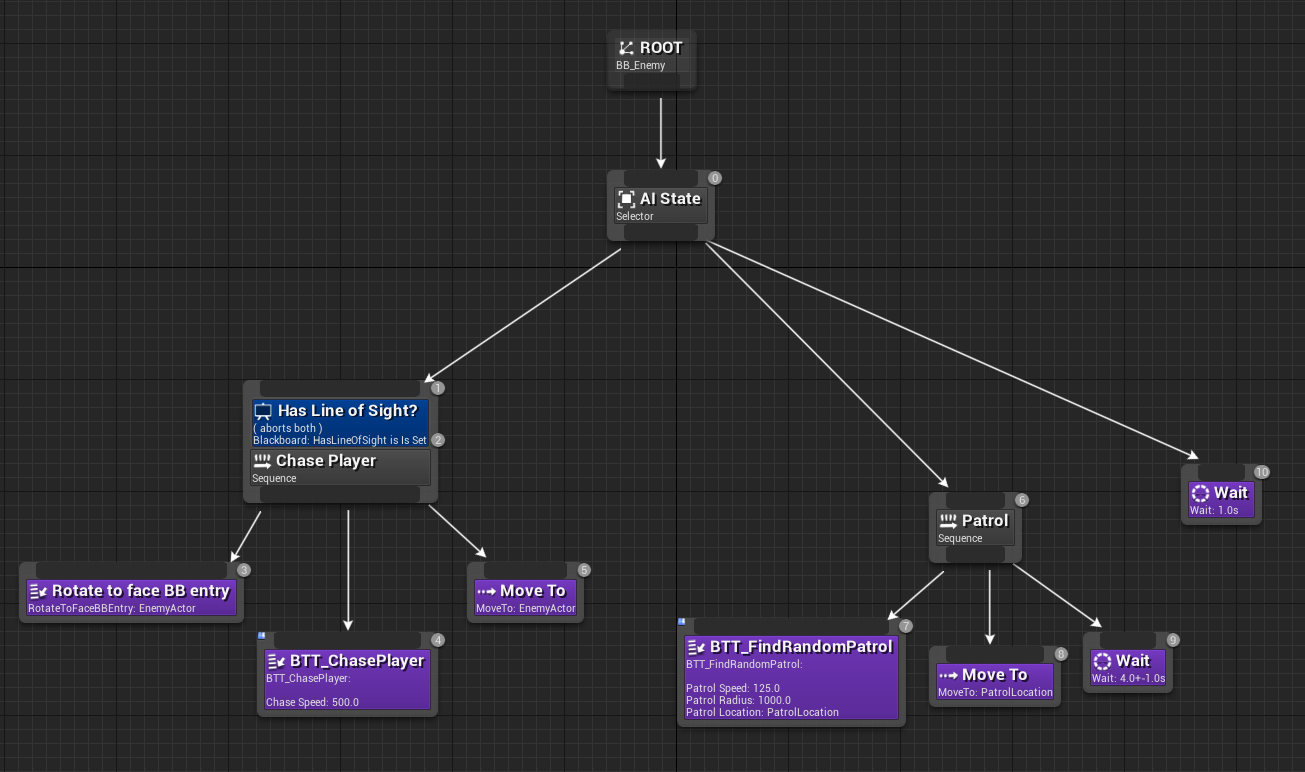
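As a rough illustration of the idea, an FSM can be written as a lookup table from (state, event) pairs to the next state. The states, event names and reactions below are hypothetical, picked only to mirror the "heal yourself" example; they are not taken from any real game.

```python
from enum import Enum, auto


class State(Enum):
    PATROL = auto()
    ATTACK = auto()
    HEAL = auto()


# (current state, event) -> next state. All names here are made up.
TRANSITIONS = {
    (State.PATROL, "enemy_spotted"): State.ATTACK,
    (State.PATROL, "low_health"): State.HEAL,
    (State.ATTACK, "enemy_lost"): State.PATROL,
    (State.ATTACK, "low_health"): State.HEAL,
    (State.HEAL, "healed"): State.PATROL,
}

# The scripted reaction associated with each state.
REACTIONS = {
    State.PATROL: "walk the patrol route",
    State.ATTACK: "shoot at the player",
    State.HEAL: "heal yourself",
}


class Agent:
    def __init__(self):
        self.state = State.PATROL

    def handle(self, event):
        """Apply an event and return the reaction of the resulting state."""
        # Pairs missing from the table leave the state unchanged.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return REACTIONS[self.state]


guard = Agent()
for event in ("enemy_spotted", "low_health", "healed"):
    print(event, "->", guard.handle(event))
```

Every behavior has to be listed in the table by hand, which is why FSMs stay readable for simple agents but tend to become hard to maintain as states and events multiply.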

Behavior Trees

When AI agents need to choose the best among many possible actions, Behavior Trees are usually a better solution than FSMs. Behavior Trees are similar to flow-charts: possible conditions are represented as tree branches, and possible actions are the tree leaves. The tree is evaluated at regular intervals, identifying the best action to apply. If the tree is too large to be visited entirely, algorithms such as Monte Carlo Tree Search (MCTS) can provide estimates of the payoff of each action. Behavior Trees are very common in turn-based strategy games, such as Civilization, Heroes of Might and Magic, and Pokémon.

An example of a behavior tree from the Unreal Engine 4 framework documentation.
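For readers who prefer code to diagrams, here is a minimal behavior tree sketch of my own. It is not the Unreal Engine API: the node classes (`Selector`, `Sequence`, `Condition`, `Action`), the health threshold and the action names are all assumptions, and I left out the "running" status that real engines typically use for actions spanning several ticks.

```python
SUCCESS, FAILURE = "success", "failure"


class Condition:
    """Branch test: succeeds when the predicate holds for the context."""
    def __init__(self, predicate):
        self.predicate = predicate

    def tick(self, ctx):
        return SUCCESS if self.predicate(ctx) else FAILURE


class Action:
    """Leaf: records itself as the chosen action and succeeds."""
    def __init__(self, name):
        self.name = name

    def tick(self, ctx):
        ctx["chosen"] = self.name
        return SUCCESS


class Sequence:
    """Runs children in order; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, ctx):
        for child in self.children:
            if child.tick(ctx) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Tries children in priority order; stops at the first success."""
    def __init__(self, *children):
        self.children = children

    def tick(self, ctx):
        for child in self.children:
            if child.tick(ctx) == SUCCESS:
                return SUCCESS
        return FAILURE


# Hypothetical NPC: heal when hurt, otherwise attack if possible, else patrol.
TREE = Selector(
    Sequence(Condition(lambda ctx: ctx["health"] < 30), Action("heal")),
    Sequence(Condition(lambda ctx: ctx["enemy_visible"]), Action("attack")),
    Action("patrol"),
)


def choose_action(health, enemy_visible):
    """Evaluate the tree once and return the name of the selected action."""
    ctx = {"health": health, "enemy_visible": enemy_visible}
    TREE.tick(ctx)
    return ctx["chosen"]


print(choose_action(health=80, enemy_visible=True))
```

Calling `choose_action` once per tick corresponds to the "evaluated at regular intervals" part: the priorities live in the order of the children, so changing the NPC's behavior mostly means rearranging branches.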

Does the Game Industry Need Machine Learning?

All in all, while many AI-based tasks have shifted towards Machine Learning in the last decade, the game industry has not undergone the same process: devs have generally just refined and enhanced the same technologies with no radical changes.

I think the main reason behind this lies in what the actual purpose of games is. Similarly to other media, such as movies or books, games build a narrative pact between developers and players: even the simplest game, through its context and rules, tells a story that the players unconsciously agree to believe. In this context AI agents are not meant to be strong: rather, they should be plausible, to sustain the suspension of disbelief.

Therefore the goal of AI in gaming is not to create smart agents, but rather to provide the illusion of smart agents. Game designers themselves claim that AI in games "is the equivalent of smoke and mirrors". Interestingly, this is the same philosophy behind the "Turk" hoax: the most important thing was, and still is, the experience felt by the player rather than the actual intelligence of the machine. I find these considerations quite interesting: out of the dozens of fields where AI is currently employed, they apply almost exclusively to games, because games are inherently a medium.

This explains why the game industry seems so reluctant to adopt Machine Learning: given the purpose of AI in gaming, it may be useless or even counter-productive. In addition to that, of course, Machine Learning has issues of its own:

- Predictability: Machine Learning agents may behave inconsistently, e.g., they may alternate smart actions with idiotic ones, or display certain flaws only under specific conditions never met in development. The opacity of Machine Learning models would make it very hard for game designers to identify these behaviors and correct them.

- Development Processes: in most games the development is ruled by very tight schedules. Spending months (or years!) to build from scratch a whole new AI engine based on Machine Learning sounds like a bad move when you can just re-use pre-existing technologies with incremental refinements.

- Computation: Machine Learning models, unless they are really small and simple, generally need to run on GPUs. In videogames, though, GPUs are already busy with the game graphics, so they may not be able to handle the behavior of AI agents too (especially if there are too many of them).

However, I do not think that any of these issues is a true blocker: as a matter of fact, a few experimental games have already overcome them in the past, using neural networks for specific tasks, e.g., Peter Molyneux's Black & White in the early 2000s.

Will the Game Industry Need Machine Learning?

Even if Machine Learning and the game industry do not look like a well-matched pair at present, the same may not hold in the future. Researchers have already run impressive (and extremely cool) experiments proving that Machine Learning technically can be applied to videogames with amazing results:

- OpenAI have developed OpenAI Five, a model capable of playing Dota 2, a very popular MOBA where players clash in 5 vs 5 matches. OpenAI Five, which is based on self-play reinforcement learning, spent about 10 months in a custom distributed training process; after that, it defeated the Dota 2 world champions in a livestreamed event, thus becoming the first AI to beat the world champions in an esports game.

- DeepMind have developed AlphaStar, a model that plays the famous Real-Time Strategy (RTS) game StarCraft II. In its latest iteration AlphaStar is subject to the same constraints as humans (e.g., viewing the game world through a camera, performing actions at a limited frequency, etc.); it ranked above 99.8% of active players on the official Battle.net server, reaching Grandmaster level with all three races (Protoss, Terran, Zerg) of the game.

These results prove that Machine Learning can indeed be applied to videogame agents with formidable results. The technology is here, and it is mature: studios could probably adopt it right away if it added value to what a game is actually trying to achieve. I feel that the only missing piece is a scenario where Machine Learning enables new gameplay possibilities that would not be achievable otherwise; for instance, I would love to battle adaptive NPCs that learn in real time to counter the style and strategies of human players.

I can definitely see indie devs, or the most auteur-driven game designers (e.g., Hideo Kojima, or the already mentioned Peter Molyneux), acting as pioneers in this field, later followed by more mainstream productions.

Until then, I can only keep dreaming of a neural-powered Ganondorf 😭

Thank you for reading this far!

As usual I'll leave here some additional sources:

- A nice article by Laura Maass on the current state of AI in gaming;

- A scientific survey by Kun Shao et al. on how reinforcement learning has been used so far on games in research projects.

Have a nice day!